Performance Tips

Bulk addition can provide substantial performance gains in appropriate cases. It is best used when you are adding a large number of records to a file, and its effect is more noticeable on files with a large number of records.

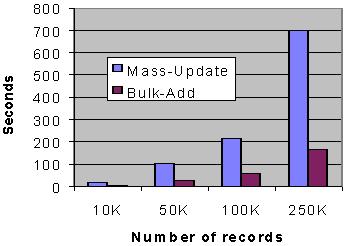

The following chart shows one set of execution times for creating a new file with eight 10-byte keys generated in random order and a 130-byte record size.

Note that the times will vary widely from machine to machine and between different file organizations; use these number just as a comparison between techniques:

Notice how the run times for MASS-UPDATE mode rise at a much steeper rate than those for bulk addition mode as the number of records grows.

You get the best performance from bulk addition when you maximize the number of records being keyed at once. This means that you want to WRITE as many records as possible to the file without performing any intervening operations on the file.
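To make "records keyed at once" concrete, the following Python model counts how a sequence of file operations splits into runs of consecutive WRITEs. It rests on an assumption the text only implies: that any non-WRITE operation (represented here by "READ", a hypothetical stand-in) ends the current batch of keys. It illustrates the principle and does not describe how the Vision runtime works internally.

```python
# Conceptual model only, not the Vision runtime's implementation.
# Assumption (hypothetical): every non-WRITE operation on the file ends the
# current "keying batch", so only consecutive WRITEs are keyed together.

def keying_batches(operations):
    """Return the sizes of the runs of consecutive WRITEs in `operations`."""
    batches = []
    current = 0
    for op in operations:
        if op == "WRITE":
            current += 1
        else:
            # Any other operation closes the open batch, if there is one.
            if current:
                batches.append(current)
            current = 0
    if current:
        batches.append(current)
    return batches


# 1,000 WRITEs with no interruptions.
uninterrupted = ["WRITE"] * 1000

# The same 1,000 WRITEs with a READ after every 100th record.
interleaved = []
for i in range(1000):
    interleaved.append("WRITE")
    if (i + 1) % 100 == 0:
        interleaved.append("READ")

print(keying_batches(uninterrupted))     # [1000]
print(len(keying_batches(interleaved)))  # 10
```

Under this model, a single READ after every 100th WRITE turns one batch of 1,000 keys into ten batches of 100, which is the kind of fragmentation the advice above is meant to avoid.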

Two configuration variables have an important effect on bulk addition performance. The first is V_BUFFERS, which determines the number of 512-byte blocks in the Vision cache. Besides its usual caching role, the Vision cache is especially important during bulk addition because it is used to gather file blocks into larger groups that are written out in a single call to the operating system. The cache always does this, but the bulk addition algorithm tends to produce very large sets of adjacent modified blocks, all of which can be written out at once. By increasing the cache size, you increase the number of blocks that can be written out in a single call.
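The block-gathering behavior described above is a form of write coalescing. The following Python sketch is a simplified model, not Vision's cache code. It assumes that modified blocks are identified by block number, that each run of consecutive block numbers costs one operating-system write call, and that the cache flushes in fixed-size groups of V_BUFFERS blocks. The names `coalesce` and `write_calls` are hypothetical.

```python
# Simplified model of write coalescing; not the Vision cache's actual code.
BLOCK_SIZE = 512  # bytes per Vision cache block, as stated in the text


def coalesce(dirty_blocks):
    """Group dirty block numbers into runs of adjacent blocks.

    Returns (first_block, block_count) pairs; each pair models one write call
    of block_count * BLOCK_SIZE bytes.
    """
    runs = []
    for block in sorted(set(dirty_blocks)):
        if runs and block == runs[-1][0] + runs[-1][1]:
            first, count = runs[-1]
            runs[-1] = (first, count + 1)  # extend the current run
        else:
            runs.append((block, 1))        # start a new run
    return runs


def write_calls(dirty_blocks, cache_blocks):
    """Count write calls if dirty blocks are flushed in groups of `cache_blocks`.

    Simplifying assumption: blocks are flushed in the order they were modified,
    and each group is coalesced independently.
    """
    calls = 0
    for start in range(0, len(dirty_blocks), cache_blocks):
        calls += len(coalesce(dirty_blocks[start:start + cache_blocks]))
    return calls


adjacent = list(range(1024))  # 1,024 adjacent modified blocks
print(write_calls(adjacent, 64))   # 16
print(write_calls(adjacent, 256))  # 4
```

In this model, quadrupling the cache from 64 to 256 blocks cuts the write calls for 1,024 adjacent blocks from 16 to 4, mirroring the effect the text attributes to a larger V_BUFFERS setting.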

For this reason, you should use a cache size of at least 64 blocks, which is the default cache size on most systems. If memory is plentiful, a cache size of 128 or 256 blocks is recommended. You can go higher; however, there is usually little additional benefit beyond about 512 blocks (256K of memory).
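The memory figure follows directly from the block size: 512 blocks × 512 bytes = 262,144 bytes, or 256K. This short Python check (the helper name `cache_memory_kb` is illustrative) computes the cache memory for the sizes mentioned above.

```python
# Each Vision cache block is 512 bytes, so the cache uses V_BUFFERS * 512 bytes.
BLOCK_SIZE = 512


def cache_memory_kb(v_buffers):
    """Return the cache size in kilobytes (1K = 1024 bytes) for V_BUFFERS blocks."""
    return v_buffers * BLOCK_SIZE // 1024


for blocks in (64, 128, 256, 512):
    print(f"V_BUFFERS {blocks:>3} -> {cache_memory_kb(blocks)}K")
# 64 -> 32K, 128 -> 64K, 256 -> 128K, 512 -> 256K
```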

The other important factor is the memory buffer used to hold record keys (V_BULK_MEMORY). Unlike V_BUFFERS, whose benefit usually levels off at around 512 blocks, this buffer is most useful when it is large. The default size is 1 MB. Larger settings improve performance when you are adding many records to a file, because the more keys the buffer can hold, the better the overall performance. For runs that will write between 250,000 and 500,000 records, a setting of 4 MB generally works well. For more than 500,000 records, we recommend a setting of at least 8 MB. Be careful not to set it too large, however: if the operating system must page significantly more memory to disk, you could lose more performance than you gain. Experiment to find the setting that works best for your system.
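The sizing guidance above can be expressed as a small lookup. This Python helper (`suggested_bulk_memory_mb` is a hypothetical name) returns a starting value in megabytes. The text gives no figure for runs under 250,000 records, so the sketch assumes the 1 MB default there. Treat each value as a starting point for the experiments the text recommends, not a tuned setting.

```python
# Encodes the V_BULK_MEMORY guidance from the text as starting values in MB.
# Assumption: below 250,000 records the text gives no figure, so the 1 MB
# default is kept. Larger is not always better: excess paging can cost more
# than it gains.

def suggested_bulk_memory_mb(records_to_write):
    """Return a starting V_BULK_MEMORY size, in megabytes, for a bulk run."""
    if records_to_write > 500_000:
        return 8  # "at least" 8 MB: a minimum, not a cap
    if records_to_write >= 250_000:
        return 4
    return 1      # default size


for n in (100_000, 300_000, 500_000, 2_000_000):
    print(f"{n:>9,} records -> {suggested_bulk_memory_mb(n)} MB")
```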

Finally, removing records because of illegal duplicate keys is expensive. Where possible, reserve bulk addition for cases in which illegal duplicate keys are rare.
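One way to keep illegal duplicates rare is to screen the input before the run. The Python sketch below is a suggested technique, not part of the Vision runtime. It assumes each input record is a dict and that `unique_keys` lists the key fields that do not allow duplicates (the primary key, plus any alternate keys declared without WITH DUPLICATES). The function name and field names are hypothetical. It only finds duplicates within the input and does not check records already in the file.

```python
# Hypothetical pre-check over input records before a bulk-addition run.
# Finds duplicates only within the input, not against existing file records.
from collections import Counter


def find_duplicate_keys(records, unique_keys):
    """Return {key_name: [values that occur more than once]} for the input."""
    problems = {}
    for key in unique_keys:
        counts = Counter(record[key] for record in records)
        dups = sorted(value for value, n in counts.items() if n > 1)
        if dups:
            problems[key] = dups
    return problems


records = [
    {"cust_id": "C001", "email": "a@example.com"},
    {"cust_id": "C002", "email": "b@example.com"},
    {"cust_id": "C001", "email": "c@example.com"},
]
print(find_duplicate_keys(records, ["cust_id", "email"]))
# {'cust_id': ['C001']}
```

Records flagged this way can be corrected or routed to a non-bulk path before the bulk run starts.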